Search is a core function of most sites on the web. Users expect to find a search box at the top of each page to help them when the navigation fails. Often, it is the first tool users will reach for, even if the navigation is perfectly fine. It is therefore vital that search provides relevant results to keep users happy and moving to the content which best serves their needs.

Search results need to be relevant. This begs three questions about relevancy:

- How is it defined?

- How is it measured?

- How can it be improved?

This article will provide a framework for addressing each of these questions. The main focus will be on measuring and improving the results.

How is it defined?

What factors go into defining relevancy? Quick answer: it is not just the keywords the user has entered.

A broader concept of relevancy for the user can include:

- The semantic meaning of the keywords entered

- Recent context of search terms entered

- Recent browsing behaviour.

More generally:

- Recently added content on the site

- Popular content on the site

- Wisdom of the crown for lookalike users

And taking time and location into account:

- The time of day

- The season

- Geographic location

A lot is going on when it comes to considering the context the user is operating in. It is not just about searching for keywords.

This context and search intent then needs to be matched with content. This can involve finding relevant content via:

- The keyword relative density for the search term (Bayesian statistics)

- Semantic meaning of the content (embeddings)

- Popularity in terms of pageviews (user behaviour)

- Popularity in terms of backlinks (pagerank)

- Recency (date boosting)

- Whether it has been seen by the user already (user behaviour).

The retrieval of results is also complex if all things are considered. However, in practice, the scope of the problem will be determined by the technology which is being used. Technologies will be able to address parts of the relevancy problem in different ways:

- Traditional search can utilise keyword density (Database, Lucene)

- Google, whilst using many approaches, revolutionised search through the use of PageRank.

- Semantic search makes use of embeddings to determine the meaning of the query and the content

- Personalisation engines make use of user behaviour for recommendations.

When measuring the success of search results, the above concepts need to be considered. The strengths and weaknesses of each solution for the backdrop for assessing results.

Assessing results and the confusion matrix

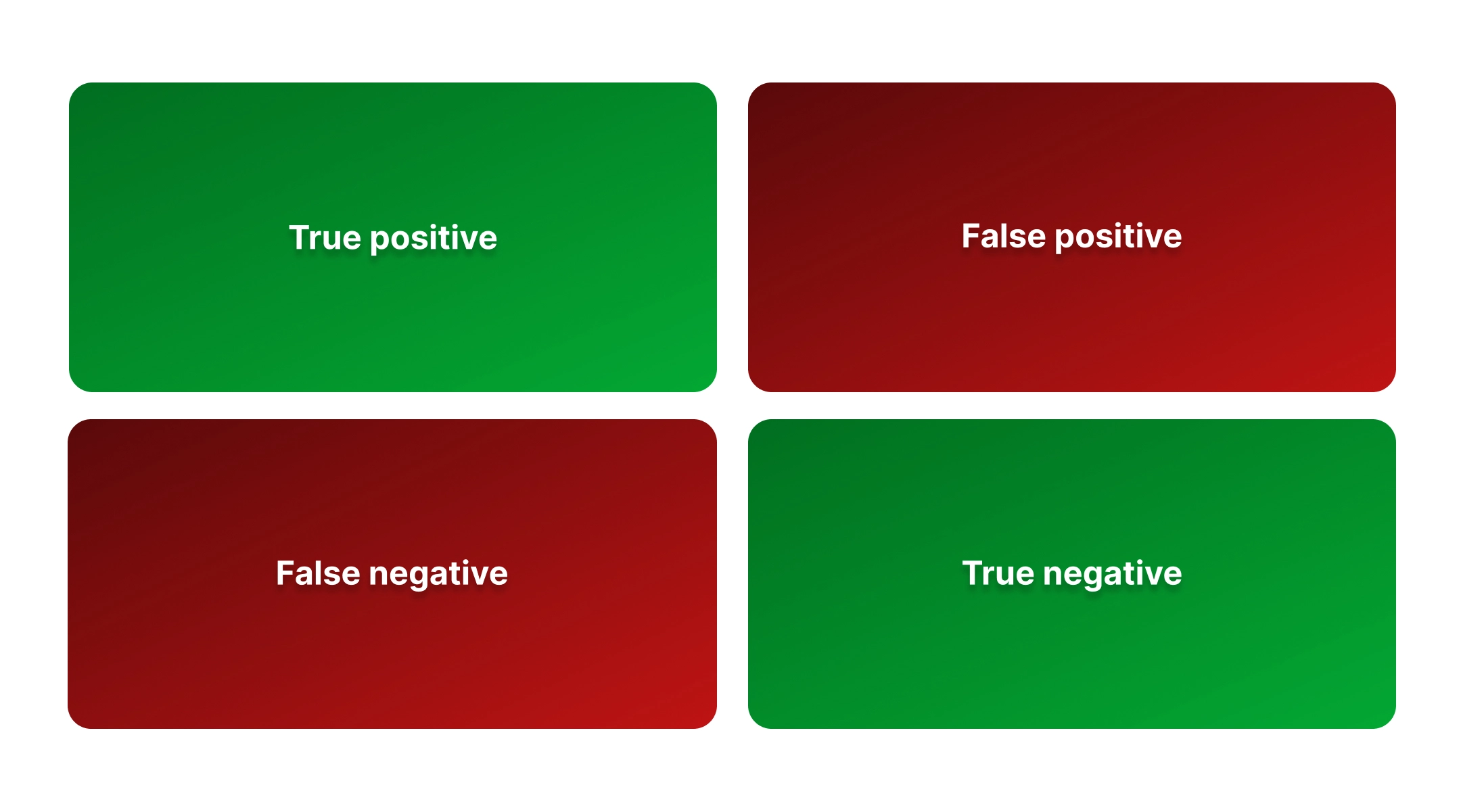

The measurement of success is more straightforward. For the results shown, does the user consider the result to be relevant or not? If yes, the result is a “true positive” (good); if not, the result is a “false positive” (bad). Relevant results, which are not shown, are” false negatives” (bad), and irrelevant results are “true negatives” (good).

The results and subsequent assessment divide the results into four groups, in what is called the confusion matrix:

- True positive: Correct item returned

- False positive: Incorrect item returned

- False negative: Correct item not returned

- True negative: Incorrect item not returned.

Search is an interesting problem, as users generally will not click through all pages of the results. We therefore need to consider the first page of results as a filter for what is considered to be found. It is therefore going to be likely that “false negatives” will continue to occur - not everything can fit on the first page. What we are therefore interested in is reducing the number of “false negatives”. Improving the quality of results comes down to assessing why bad results are showing and why good results have been pushed down.

Practical approaches to improving results

Now that we have a conceptual framework for understanding the quality of results, we can apply that to the implementation of the system, which will rank the results.

In collaboration with the site owner, and with reference to feedback collected in discovery, a “typical” set of search queries can be defined for different groups of users. This may include popular searches on the site or be targeted at areas where users tend to struggle.

Then for each query:

- Define a set of items which would be considered “positive” results. Ideally, these will have relevance across different dimensions (content, time, type). The number of positive results should match the number of results returned.

- Run the query and record the results

- Score the results based on the “recall” of the system. It is the ratio of True Positives to the number of results.

- Investigate the False Negatives and identify causal patterns (content, time, type).

The above steps can be iteratively run through, tweaking the setup each time, to improve the recall as best as possible.

When making the changes, it is important to keep moderation in mind. The system should not be optimised just to get the best results for the specific queries which have been selected. All changes should ideally help the operation of the system for all users.

A summary report should then identify areas where the search could be improved. Recommended improvements would aim to improve outcomes across all searches and not just problem-solve for a single use case.

Typical outcomes

Morpht will often run this kind of analysis on sites where the client is reporting that search is not working well. In many cases, we are using the Solr search engine, which is based on the open source Lucene project. Lucene can calculate relevancy based on relative keyword density and can boost results based on relevancy. It also offers good facetting ability.

With these technological constraints in mind, our recommendations generally revolve around tweaking the various options provided by Solr and Search API:

- The content being indexed

- Boosting on HTML elements

- Boosting on content type

- Boosting on recency

- Fuzzy search on phonetic typos.

This involves fine-tuning the various boosts in the search to improve the chances of getting relevant results. We generally can make significant improvements to the operation of the search, within the constraints of the technology.

The future

Relevancy is a complex area, and technology is developing rapidly. Older technologies based on keyword density will need to be enhanced to keep pace with the expectations of users. The development of semantic search databases with embeddings and the utilisation of user behaviour have taken search to a new level. More things will become part of the relevancy equation.

- User behaviour: Custom solutions such as Algolia and Recombee have taken a holistic approach, which incorporates user behaviour. The popularity of content and the preferences of the user are taken into consideration when returning results.

- Semantic search: New vector databases and embedding solutions provide bold new advances in identifying the meaning behind the words. Semantics are no longer just limited to a “bag of words” approach. meaning is derived from complex relationships found within the content.

We will examine these concepts further in a subsequent article.

Final thoughts

Search is complex. Users have high demands. Technology is improving. Can you keep up?

This article has taken a look at what goes into relevancy and how technological choices define the scope of the problem. The confusion matrix was offered as a tool which can be used to assess the quality of results. This tool can be used no matter what technology is being used. The key takeaway is that the measurement of results can lead to insights to drive better configuration of the technology to improve outcomes for users across a wide range of scenarios. Improving search is an iterative process which requires feedback from users and a mindful approach to the changes which can be made to improve the overall experience.